QRI’s research lineages include viewing the brain as a self-organising system. This tradition involves moving away from accounts of functional localisation, on the grounds that localised functions may be artefacts of the tools we use to look at the brain, rather than phenomena emerging from the brain’s underlying structure. Curious about this historical background, I delved into the literature to read how different neuroscientists have attempted to measure and interpret information content in the brain.

In the 1960s, the predominant doctrine in psychology was the behaviourist belief that science can only measure stimuli and responses but not internal states. In reaction to this stance, cognitive psychology emerged with the ambition of understanding the mental processes that produce behaviour. By the late 1970s, some cognitive psychologists had started studying the effects of brain damage on cognitive functions, trying to uncover the neural basis of mental processes. Their work led to the birth of cognitive neuroscience (Passingham 2016). During the following decades, cognitive neuroscience benefited from an outstanding development of techniques to measure and analyse brain activity of both healthy and impaired humans.

Among other factors, the shift from behaviourism to cognitive psychology was encouraged by the co-occurring onset of the information age. In the 1940s, the increasingly sophisticated study of logic gates led to the realisation that information could be defined and quantified. Shannon’s first definition of information (Shannon 1948) laid the foundations for the quantitative study of information. Under the framework of information theory, cognitive neuroscience aims to leverage brain imaging techniques to measure information in the brain (de-Wit et al. 2016).

However, to properly assess whether cognitive neuroscience can measure brain information, we need to revisit what information means. Shannon’s original formulation of information theory (Shannon 1948) provides an explicit definition of information: how much a message transmitted through a noisy channel reduces uncertainty for the receiver of the message. Shannon’s definition requires several elements: a transmitter, a message, a channel, a receiver and noise. It is important to note that all these elements are context-dependent. For example, when recording speech, any audio other than the speaker’s voice is considered noise. By contrast, when recording live music, we consider the spoken words of the audience as noise and try to filter them out. How we define who or what receives a message therefore significantly shapes what we mean by information.
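To make the idea of uncertainty reduction concrete, here is a minimal sketch for the simplest textbook case: a binary symmetric channel that flips each transmitted bit with a fixed probability. The function names and the assumption of equiprobable input bits are illustrative choices, not part of the cited works.

```python
import math

def binary_entropy(p: float) -> float:
    """Entropy in bits of a binary variable that equals 1 with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def information_received(flip_prob: float) -> float:
    """Mutual information (bits per symbol) between sender and receiver for a
    binary symmetric channel, assuming equiprobable input bits."""
    # Receiver's uncertainty before the message arrives: H(X) = 1 bit.
    # Uncertainty remaining after it arrives: H(X|Y) = H(flip_prob).
    return 1.0 - binary_entropy(flip_prob)

if __name__ == "__main__":
    for flip_prob in (0.0, 0.1, 0.5):
        bits = information_received(flip_prob)
        print(f"flip probability {flip_prob:.1f}: {bits:.3f} bits per symbol")
```

With no noise the receiver gains one full bit per symbol. When bits are flipped half of the time the receiver gains nothing, even though the transmitter sent exactly the same message.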

In the context of brain activity, (de-Wit et al. 2016) argue that different definitions of the receiver of information lead to different measurement paradigms. They argue that “much modern cognitive neuroscience focuses on interpreting physical signals in the brain from the perspective of the external experimenter as the receiver of information” (de-Wit et al. 2016). Many neuroscientific studies interpret the correlation between activity in the brain and a given perceptual stimulus or behavioural response as the discovery of “the neural representation of X” or “the neural code underlying Y”. These interpretations are made from the implicit perspective of the experimenter, who measures both phenomena and labels them as related (i.e., experimenter-as-receiver). However, (de-Wit et al. 2016) further argue that “information is not a static property inherent to a physical response and that it is only when physical responses can be shown to be used by the brain that we have positive evidence that a physical signal acts as information”.

In contrast, (de-Wit et al. 2016) propose the paradigm of the cortex-as-receiver. They argue that brain information refers only to physical signals that are actually employed by the brain to coordinate its functioning, and they call for experimental designs aimed at showing how measured brain activity is used by other brain areas. The argument of (de-Wit et al. 2016) leads to a model in which the brain, except for external stimuli, both generates and receives the information that exists within it. This model is known as a self-organising system. Recent evidence from computational neuroscience suggests that the brain exhibits many properties of self-organising systems, such as modular connectivity, unsupervised learning, adaptive ability, functional resilience and plasticity, local-to-global functional organisation and dynamic system growth (Dresp-Langley 2020). Under the framework of the brain as a dynamic self-organising system, we can define information as the patterns of activity that implement the self-organising principles that the brain uses to function. As a side note, it is important to point out that this definition does not comment on whether the information is conscious or not. Relatedly, a further consideration may distinguish between encoding information and rendering it. There may be something unique that happens when the brain chooses to explicitly render information as perception in a coherent scene, rather than merely acting on it implicitly. Further research may seek to distinguish between these cases, but that is beyond the scope of this essay.

Using EEG noise to deny free will

Brain waves, also known as brain oscillations, consist of the synchronised firing of populations of neurons across the brain (Steriade and Deschenes 1984). They are neural rhythmic activities generated from within (Buzsáki 2006). Since the 1920s, neuroscientists have been measuring brain oscillations by recording the brain’s electromagnetic field inside the skull through electrocorticogram (ECoG) or outside it via electroencephalogram (EEG) and magnetoencephalogram (MEG).

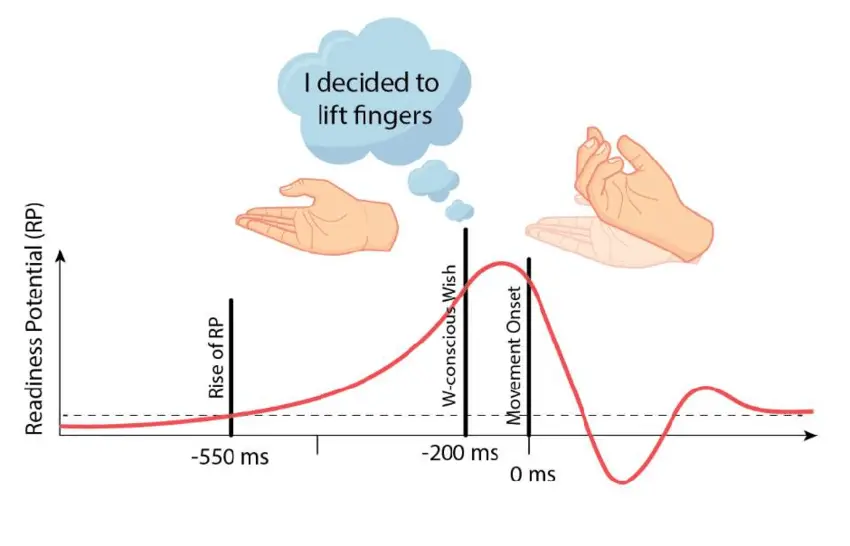

In the 1960s, while investigating self-initiated action in the brain, two German neurologists discovered a build-up of electrical activity in EEG readings occurring shortly before a self-initiated movement of the right index finger. (Kornhuber and Deecke 1965) labelled this pattern the readiness potential (RP).

A few decades later, Benjamin Libet, a pioneer of consciousness research, devised an experiment to measure the readiness potential and its relation to conscious awareness of a willful desire to move. Libet’s famous result showed that the readiness potential occurred as long as 300ms before subjects reported awareness of a conscious will to act (Libet et al. 1983).

During the past few decades, the result of Libet’s experiment has been widely discussed across society. Conservative interpretations of the result associated it with unconscious processing (McGilchrist 2019). Several philosophers and journalists cited Libet’s experiment as evidence that people’s choices result from unknown mechanisms outside of our control, denying the existence of free will (Cave 2016; Harris 2012; Hogenboom and Pirak 2020; Mele 2018). Neuroscientists replicated the results of Libet’s experiment using publicly available data (Dominik et al. 2018) and more modern techniques like functional magnetic resonance imaging (Soon et al. 2008).

Critics of Libet’s experiment argued that people might not be able to accurately record the precise moment of their decision to move (Danquah, Farrell, and O’Boyle 2008) or that the readiness potential could relate to directing attention to the wrist or the button to press, or even to imagining the movement. However, until recently, most observers of the results did not question the assumption that the readiness potential encoded information actually related to the subsequent spontaneous movement (Trevena and Miller 2010).

Finally, in 2012, Schurger and colleagues proposed a plausible explanation of Libet’s result that challenged the implicit assumption of a relationship between the readiness potential and spontaneous movement. (Schurger, Sitt, and Dehaene 2012) argued that when humans are instructed to produce movement without any specific temporal cue, the precise moment at which movement happens is “determined by spontaneous sub-threshold fluctuations in neuronal activity”. In other words, when we are instructed to move purposelessly, the brain may employ noisy activity in the accumulation of signals required to avoid endless indecision. (Schurger, Sitt, and Dehaene 2012) demonstrated that they could explain the EEG data recorded during Libet’s experiment using a leaky stochastic accumulation model, a computational framework that simulates decision-making processes by incorporating randomness and a tendency to accumulate evidence over time (Usher and McClelland 2001). Additional empirical evidence supported the possibility that the readiness potential may be just noise that the brain leverages in a symmetry-breaking way (Maoz et al. 2013).
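The accumulator argument can be illustrated with a small simulation. The sketch below is not the fitted model from (Schurger, Sitt, and Dehaene 2012): the parameter values, function names and time step are illustrative assumptions. It simulates a noisy leaky accumulator until it first crosses a threshold, then averages many trials aligned to that crossing, in the same way readiness potentials are averaged backwards from movement onset.

```python
import numpy as np

def simulate_until_threshold(rng, drift=0.1, leak=0.5, noise=0.1,
                             threshold=0.3, dt=0.01, max_steps=100_000):
    """Return the accumulator trace up to its first threshold crossing,
    or None if the threshold is not reached within max_steps.
    All parameter values are illustrative, not fitted to data."""
    x = 0.0
    trace = [x]
    for _ in range(max_steps):
        # Euler-Maruyama step: constant drift, leak towards zero, white noise.
        x += (drift - leak * x) * dt + noise * np.sqrt(dt) * rng.standard_normal()
        trace.append(x)
        if x >= threshold:
            return np.array(trace)
    return None

def back_averaged_trace(n_trials=200, window=100, seed=0, threshold=0.3):
    """Average the last `window` samples before each threshold crossing."""
    rng = np.random.default_rng(seed)
    epochs = []
    while len(epochs) < n_trials:
        trace = simulate_until_threshold(rng, threshold=threshold)
        # Keep only trials that crossed and are long enough to fill the window.
        if trace is not None and len(trace) >= window:
            epochs.append(trace[-window:])
    return np.mean(epochs, axis=0)

if __name__ == "__main__":
    average = back_averaged_trace()
    print("start of window:", round(float(average[0]), 3))
    print("at crossing:    ", round(float(average[-1]), 3))
```

Because every epoch is selected to end at a threshold crossing, the average ends at or above the threshold while all earlier samples lie below it. An apparent build-up can therefore appear in the average even though no single trial contains a dedicated preparatory signal.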

In summary, while the account provided by (Schurger, Sitt, and Dehaene 2012) is only one alternative explanation, the controversial history of the readiness potential shows how challenging it is to correctly interpret neural correlates of experimental phenomena. Similarly to Libet’s experiment, in the past few decades many neuroscience studies leveraged experimental correlations to propose explanatory accounts of cognitive functions that turned out to be spurious or not generalizable (Poldrack et al. 2017).

Uncovering stimuli information within brain waves

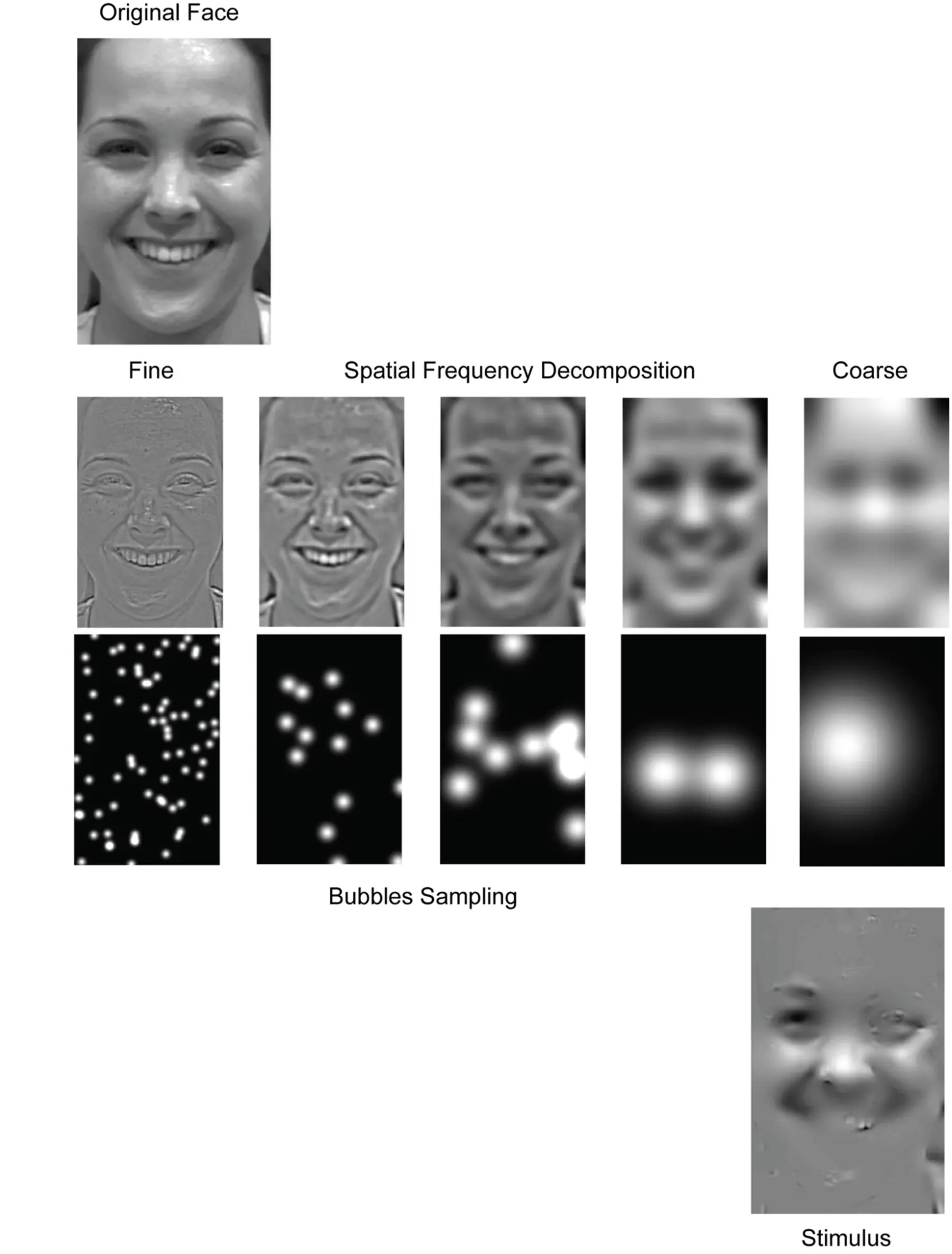

Responding to misinterpretations of brain activations, researchers have recently been working on more precise ways of identifying how the brain encodes information contained in the stimuli it is exposed to. More specifically, the framework of “information-based functional brain mapping” proposes moving from identifying brain regions whose average activity changes across experimental conditions to identifying brain activations that change in the same quantitative way as the experimental stimuli (Kriegeskorte, Goebel, and Bandettini 2006). In practice, researchers have been introducing variability into the stimuli and analysing how the added variability relates to brain waves and behaviour. A representative study designed under this paradigm is titled “Cracking the Code of Oscillatory Activity” (Schyns, Thut, and Gross 2011). In this work, Schyns and his colleagues controlled visual information by carefully sampling images of facial expressions using “bubbles” of different sizes for different spatial frequencies. By relating variability in the stimuli to variability in how people categorise emotions and in their EEG responses, they investigated how different properties of brain waves encode information. More specifically, they analysed the relationships between the stimuli and the wave power and phase.
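For readers unfamiliar with the two wave properties, the following sketch shows one simple way to read the power and phase of an oscillation at a chosen frequency from a signal, using the discrete Fourier transform. This is a generic illustration with hypothetical names and synthetic signals, not the analysis pipeline of the cited study.

```python
import numpy as np

def power_and_phase(signal, sampling_rate, frequency):
    """Estimate power and phase (radians) of the component of `signal`
    closest to `frequency`, using the real-input FFT."""
    n = len(signal)
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(n, d=1.0 / sampling_rate)
    k = int(np.argmin(np.abs(freqs - frequency)))  # nearest frequency bin
    amplitude = 2.0 * np.abs(spectrum[k]) / n
    power = amplitude ** 2 / 2.0  # mean squared value of a sinusoid
    phase = float(np.angle(spectrum[k]))
    return float(power), phase

if __name__ == "__main__":
    sampling_rate = 250  # samples per second (assumed)
    t = np.arange(sampling_rate) / sampling_rate  # one second of data
    # Two synthetic 10 Hz oscillations with equal amplitude but different phase.
    early = 2.0 * np.cos(2 * np.pi * 10 * t + np.pi / 3)
    late = 2.0 * np.cos(2 * np.pi * 10 * t - np.pi / 3)
    print(power_and_phase(early, sampling_rate, 10))
    print(power_and_phase(late, sampling_rate, 10))
```

The two synthetic signals have identical power and differ only in phase, so an analysis that looks at power alone cannot tell them apart.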

While most previous studies ignored the role of the wave phase in encoding information, (Schyns, Thut, and Gross 2011) showed that (a) most information about facial expression categorisation resides in the conjunction of phase and power; (b) phase and power code task-relevant features of the stimuli; and (c) such information is simultaneously encoded through different signals across oscillatory frequencies, with wave phase prominently encoding facial features.

How do these findings fit within the different definitions of information we discussed in this essay? It appears that the work of (Schyns, Thut, and Gross 2011) focuses on specific brain phenomena and regions as the receiver of information from the external environment. As such, quantifying representations using information theory zooms in on how the brain encodes external information and provides useful insights about sensory processing. However, it is challenging to extend this approach to multi-region high-dimensional inquiries and scale it to investigate how the brain processes information as a self-organising system. This is not to say that information theory cannot provide insights about representational interaction, as demonstrated by recent work from (Ince et al. 2016) showing cross-hemisphere representational transfer and the time course of eye coding. Nonetheless, their findings, like those of most studies following this framework, appear to be limited in scope and scale and do not offer integrated accounts of whole-brain functioning.

Brain waves and self-organising principles

When viewing the brain as a complex dynamic system, researchers investigate the fundamental principles underlying the behaviour of brain oscillations. Recent work in computational neuroscience has attempted to advance knowledge in this direction.

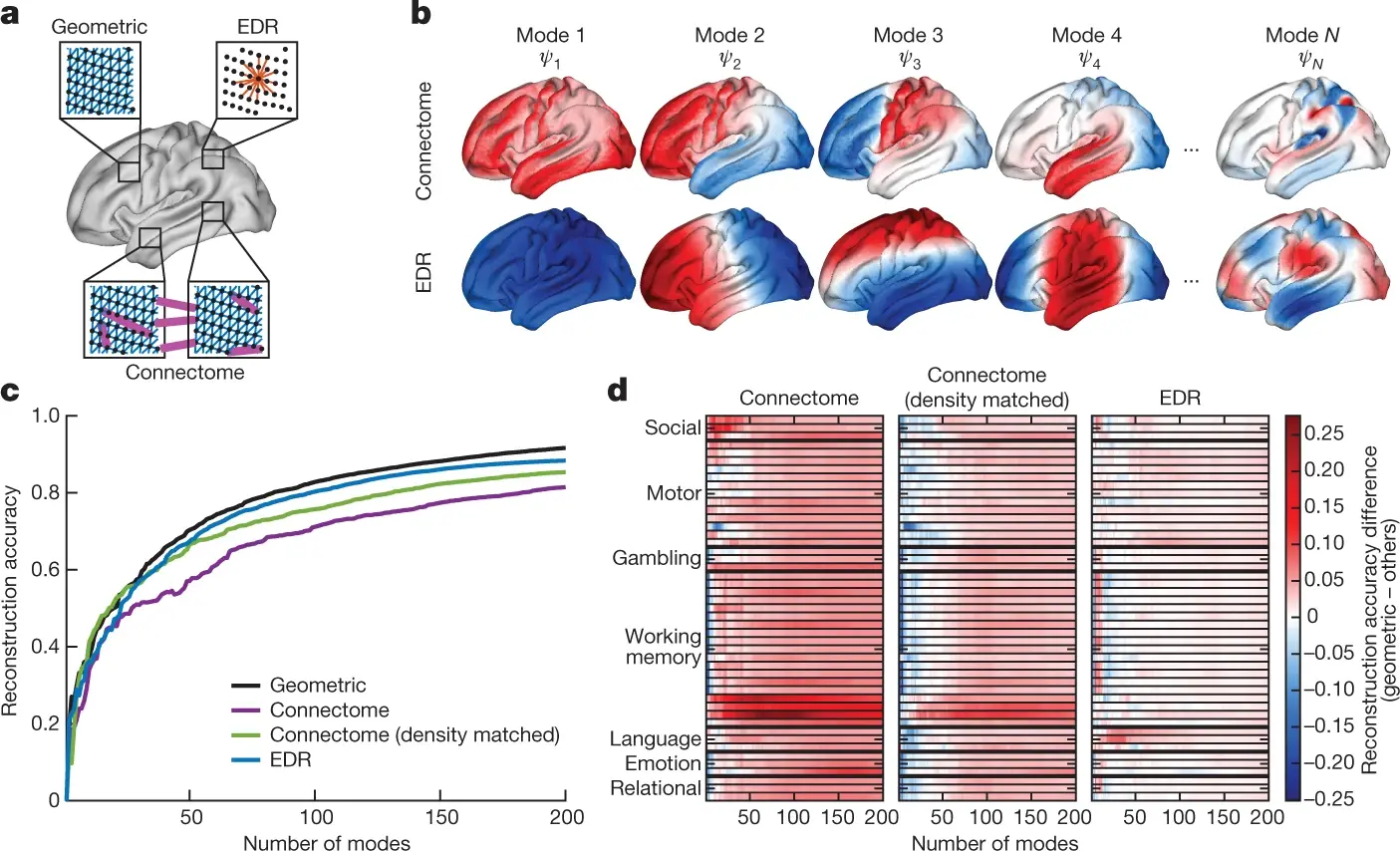

(Atasoy, Donnelly, and Pearson 2016) proposed the concept of connectome-specific harmonic waves (CSHW), a method that leverages magnetic resonance and diffusion tensor imaging to calculate standing wave patterns (i.e. harmonic waves) that may naturally arise within the brain and distribute information. In the CSHW framework, the standing wave patterns are derived by calculating the eigenvectors of the graph Laplacian of the human connectome (i.e. the graph of both local and long-range cortical and thalamic connections). Notably, other fields of science have shown that eigendecomposition underlies many self-organising patterns, such as vibration patterns in metal plates (Ullmann 2007), mammalian coat patterns (Murray 1988) and electron orbitals (Schrödinger 1926).
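The link between eigenvectors and standing waves is easiest to see on a toy graph. The sketch below uses a simple chain of nodes as a stand-in for the connectome and the graph Laplacian as the operator; both are assumptions of this illustration, and the function names are hypothetical. A real CSHW analysis would build the graph from imaging data.

```python
import numpy as np

def path_graph(n_nodes):
    """Adjacency matrix of a chain: node i is connected to node i + 1."""
    adjacency = np.zeros((n_nodes, n_nodes))
    idx = np.arange(n_nodes - 1)
    adjacency[idx, idx + 1] = 1.0
    adjacency[idx + 1, idx] = 1.0
    return adjacency

def graph_laplacian(adjacency):
    """Combinatorial graph Laplacian L = D - A."""
    return np.diag(adjacency.sum(axis=1)) - adjacency

def harmonic_modes(adjacency):
    """Eigenvalues (ascending) and eigenvectors (columns) of the Laplacian."""
    return np.linalg.eigh(graph_laplacian(adjacency))

def sign_changes(mode):
    """Number of times the mode changes sign along the chain."""
    return int(np.sum(np.diff(np.sign(mode)) != 0))

if __name__ == "__main__":
    eigenvalues, modes = harmonic_modes(path_graph(64))
    for k in range(5):
        print(f"mode {k}: eigenvalue {eigenvalues[k]:.4f}, "
              f"sign changes {sign_changes(modes[:, k])}")
```

On this chain the first eigenvector is constant and each successive eigenvector adds one more oscillation, just like the harmonics of a vibrating string. On a connectome graph the same computation yields spatial patterns shaped by the brain's wiring rather than by a straight line.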

Building upon the idea of naturally occurring standing wave patterns in the brain, (Pang et al. 2023) demonstrated that brain harmonic waves can alternatively be constructed from the geometry of the brain. More specifically, they derive the eigenmodes underlying harmonic waves by solving the eigendecomposition problem on a mesh representation of a population-averaged template of the neocortical surface.

The most compelling evidence in support of the work of (Atasoy, Donnelly, and Pearson 2016) and (Pang et al. 2023) is that their models can accurately predict brain activity at the macroscopic scale. Empirically, (Pang et al. 2023) reconstructed activity data of 255 brains from the Human Connectome Project (HCP) (Van Essen et al. 2013) as a weighted sum of the eigenvectors derived from either the surface mesh (as in the geometry-based model) or the human connectome graph (as in the CSHW model). Using their geometry-based model, they reported a correlation between empirical and reconstructed data of 0.38 with only ten eigenvectors, and above 0.8 when using approximately 100 eigenvectors. Results using the CSHW model were similarly accurate, although slightly inferior.
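The reconstruction step described above can be sketched on synthetic data. In this illustration the eigenvectors come from a toy chain graph, the "activity map" is an invented bump plus noise, and the weights are obtained by least squares; all of these are assumptions made for the example, and the printed correlations are not comparable to the values reported in the cited work.

```python
import numpy as np

def path_graph_modes(n_nodes):
    """Laplacian eigenvectors of a chain graph, ordered from smooth to fine."""
    adjacency = np.zeros((n_nodes, n_nodes))
    idx = np.arange(n_nodes - 1)
    adjacency[idx, idx + 1] = 1.0
    adjacency[idx + 1, idx] = 1.0
    laplacian = np.diag(adjacency.sum(axis=1)) - adjacency
    _, modes = np.linalg.eigh(laplacian)
    return modes

def synthetic_activity(n_nodes, seed=0):
    """Invented activity map: a smooth bump plus white noise."""
    rng = np.random.default_rng(seed)
    position = np.arange(n_nodes)
    bump = np.exp(-((position - n_nodes / 3) / 6.0) ** 2)
    return bump + 0.3 * rng.standard_normal(n_nodes)

def reconstruction_correlation(modes, activity, n_modes):
    """Fit the activity as a weighted sum of the first n_modes eigenvectors
    and return the Pearson correlation with the original map."""
    basis = modes[:, :n_modes]
    weights, *_ = np.linalg.lstsq(basis, activity, rcond=None)
    reconstruction = basis @ weights
    return float(np.corrcoef(activity, reconstruction)[0, 1])

if __name__ == "__main__":
    modes = path_graph_modes(64)
    activity = synthetic_activity(64)
    for n_modes in (2, 5, 10, 20, 64):
        r = reconstruction_correlation(modes, activity, n_modes)
        print(f"{n_modes:3d} modes: correlation {r:.3f}")
```

Because the modes form a complete basis, the correlation can only grow as modes are added and reaches 1 when all of them are used. The informative quantity is therefore how quickly accuracy rises with the first few modes, which is what the reported figures for ten and roughly 100 eigenvectors describe.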

It is important to note that the researchers reconstructed brain activity using only eigenvectors derived from either the brain surface mesh or the connectivity graph, which are therefore independent of the activation data. In light of this, the resulting correlations are striking: the evidence that brain-wide activity can be predicted by geometry-based models (Pang et al. 2023) contradicts long-standing theories of brain information flow based on connectivity between cortical regions (Honey et al. 2009). Further, a correlation of nearly 0.4 between brain-wide dynamics and merely ten geometric eigenmodes suggests a tight coupling between shape and vibration and, perhaps ultimately, between the spatial and temporal domains of activity.

In terms of identifying information in the brain, unlike the stimuli-based methods described in the previous sections, harmonic wave theories do not aim to elucidate what information a certain brain recording contains but rather how information flows through the brain as a whole. They postulate that the brain organises itself using standing wave patterns of different frequencies that distribute different information. To further test the theory of brain harmonics, QRI is investigating the use of phenomenal stimuli such as strobing lights and tactile and auditory input to see whether simple and clear physical vibrations manifest as sparse and clean geometric eigenmodes in brain signals (Volpato et al. 2023; McGowan et al. 2022).

Because of their ability to predict brain activity, harmonic wave models resemble the emerging framework of neural encoding (Holdgraf et al. 2017). However, instead of predicting brain activity from a stimulus, they use whole-brain properties such as shape or connectivity. One limitation of encoding frameworks such as harmonic wave models is that they often do not use standardised evaluation procedures. For example, it is not clear whether the amplitude parameters that (Pang et al. 2023) use to fit their eigenvectors to the empirical data require the use of test data and thus introduce a statistical leak into their method. As neural encoding advances to make predictions that shape computational theories, it would be helpful for the field to adopt standard evaluation methods, similar to other computational fields (Thiyagalingam et al. 2022).
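The general concern about evaluation can be illustrated with a deliberately extreme example. The sketch below fits mode weights to pure noise and compares the correlation measured on the same data used for fitting with the correlation measured on held-out nodes. The hold-out scheme, the toy chain graph and all names are hypothetical choices for this illustration; it says nothing about how any published study was actually evaluated.

```python
import numpy as np

def path_graph_modes(n_nodes):
    """Laplacian eigenvectors of a chain graph, ordered from smooth to fine."""
    adjacency = np.zeros((n_nodes, n_nodes))
    idx = np.arange(n_nodes - 1)
    adjacency[idx, idx + 1] = 1.0
    adjacency[idx + 1, idx] = 1.0
    laplacian = np.diag(adjacency.sum(axis=1)) - adjacency
    _, modes = np.linalg.eigh(laplacian)
    return modes

def in_sample_and_held_out(basis, data, train_idx, test_idx):
    """Correlation when weights are fitted and scored on the same nodes,
    versus fitted on training nodes and scored on held-out nodes."""
    w_all, *_ = np.linalg.lstsq(basis, data, rcond=None)
    in_sample = np.corrcoef(data, basis @ w_all)[0, 1]
    w_train, *_ = np.linalg.lstsq(basis[train_idx], data[train_idx], rcond=None)
    held_out = np.corrcoef(data[test_idx], basis[test_idx] @ w_train)[0, 1]
    return in_sample, held_out

def average_scores(n_nodes=200, n_modes=50, n_train=150, n_repeats=20, seed=0):
    """Average both scores over repeated draws of structureless noise."""
    rng = np.random.default_rng(seed)
    basis = path_graph_modes(n_nodes)[:, :n_modes]
    scores = []
    for _ in range(n_repeats):
        data = rng.standard_normal(n_nodes)  # no structure to be explained
        order = rng.permutation(n_nodes)
        scores.append(in_sample_and_held_out(
            basis, data, order[:n_train], order[n_train:]))
    mean_in, mean_out = np.mean(scores, axis=0)
    return float(mean_in), float(mean_out)

if __name__ == "__main__":
    mean_in, mean_out = average_scores()
    print(f"in-sample correlation: {mean_in:.2f}")
    print(f"held-out correlation:  {mean_out:.2f}")
```

Even though the data contain nothing to explain, the in-sample correlation is clearly positive because the weights absorb part of the noise, whereas the held-out correlation stays close to zero. A shared evaluation protocol would make it explicit which of the two kinds of score a reported number represents.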

Brain reading in the wild

An alternative way of investigating brain-wide oscillations is to attempt to exploit their information content. Brain-computer interfaces (BCIs) turn human neural activity into commands to control devices like prosthetic limbs and cursors or to diagnose phenomena such as epileptic seizures or sleep quality (Hossain et al. 2023).

BCIs employ the opposite mechanism to neural encoding: decoding models, which leverage brain activity to predict or control the external environment and can indicate whether a given piece of information exists in the brain and can be exploited. In recent years, BCIs have reached high degrees of accuracy (i.e. above 90% on most existing benchmarks) and made significant advances in practical applications (Hossain et al. 2023). It is noteworthy that most BCI algorithms make no use of existing findings from cognitive neuroscience (Ritchie, Kaplan, and Klein 2019). This is further evidence that past experimental paradigms in cognitive neuroscience did not succeed in generating an understanding of brain information that is reliable and exploitable.
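As a minimal illustration of what a decoding model does, the sketch below trains a nearest-centroid classifier on synthetic multi-channel "trials" and measures how often it recovers the condition label on new trials. The data generator, channel count and class separation are invented for the example, and the resulting accuracy has no relation to the benchmark figures cited above.

```python
import numpy as np

def make_trials(rng, n_trials, n_channels=8, separation=1.5):
    """Synthetic trials: unit-variance noise on every channel, shifted by
    `separation` for condition 1. Entirely invented data."""
    labels = rng.integers(0, 2, size=n_trials)
    features = rng.standard_normal((n_trials, n_channels))
    features += separation * labels[:, None]
    return features, labels

def fit_centroids(features, labels):
    """Mean feature vector of each condition, stacked as rows."""
    return np.stack([features[labels == c].mean(axis=0) for c in (0, 1)])

def decode(centroids, features):
    """Assign each trial to the condition with the nearest centroid."""
    distances = np.linalg.norm(
        features[:, None, :] - centroids[None, :, :], axis=2)
    return distances.argmin(axis=1)

def held_out_accuracy(seed=0, n_train=200, n_test=200):
    """Train on one set of trials and score on a separate set."""
    rng = np.random.default_rng(seed)
    train_x, train_y = make_trials(rng, n_train)
    test_x, test_y = make_trials(rng, n_test)
    centroids = fit_centroids(train_x, train_y)
    return float(np.mean(decode(centroids, test_x) == test_y))

if __name__ == "__main__":
    print(f"held-out decoding accuracy: {held_out_accuracy():.2f}")
```

High accuracy here shows that the condition can be read out from the signal by an outside observer. It does not show which features of the signal, if any, the system that generated it would itself rely on.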

However, a significant limitation of decoding models is that, while they exploit information in a practically reliable way, it is often difficult to understand what information they exploit and how (Kriegeskorte and Douglas 2019). Such models may equip us with devices that solve real-world problems and still fail to advance our understanding of brain mechanics. Growing algorithmic sophistication and computational capabilities are increasing our ability to reproduce complex systems, but the challenge of interpreting them is growing at a comparable pace both in neuroscience and beyond (Samek et al. 2021). At present, several research programs are investigating neural decoding systems that can support both practical applications and legibility of the functioning of the measured brain signals. For example, some consider Max Tegmark’s symbolic regression (Angelis, Sofos, and Karakasidis 2023) as a possible mechanism to develop more effective and intuitive interfaces, leading also to improved understanding of how the brain functions.

Conclusion

Cognitive neuroscience emerged as a response to the behaviourist dictum that understanding what happens inside the human brain is an impossible task. In turn, this led to the development of a multitude of ways of measuring neurological activity.

However, a large portion of the interpretations of such measurements has been overconfident about the information they contain (Boekel et al. 2015; Poldrack et al. 2017). We can see evidence of the premature nature of such interpretations in how they have been revised by subsequent studies and in how they have failed to inform principles of whole-brain functioning and practical applications. This is not to say that research investigating neural correlates is not useful, but rather that ambitious explanations of what information such brain activity represents may not be accurate, especially when they do not take into account the context of the information and whether we are implicitly treating ourselves as the receivers and interpreters of the person’s brain signals, rather than making sense of them in context.

As neuroscience matures, novel ways of identifying the information content of neural phenomena emerge. Methods for quantifying information representation in neuroimaging provide more details on the relationships between activations and stimuli or behaviour but struggle to scale to high-dimensional and brain-wide considerations. Whole-brain, model-centric paradigms (Devezer and Buzbas 2023) aim to identify the sources of generalizability but are often difficult to evaluate and compare. Finally, decoding models of brain-wide activity can power practically impactful applications but are difficult to interpret and distil into conveyable knowledge. Looking ahead, growing interdisciplinary collaborations and the development of shared representational considerations could help us leverage different approaches to overcome their limitations and triangulate conclusions on how the brain implements the mind.
